Do you want to get public data from a US-based website but get blocked whenever you try?

This is a common scenario known as geo-blocking: the website restricts access based on your location, so you cannot collect the data you need.

This article explains how to get the public data you want by using a US proxy.

Read below to find out how:

Why Is It Difficult to Gather Data from Websites Based In the USA?

Imagine clicking on a link to find relevant information, only to be denied access to the website. Most of us have experienced this online, yet few of us understand the reasons behind the restrictions.

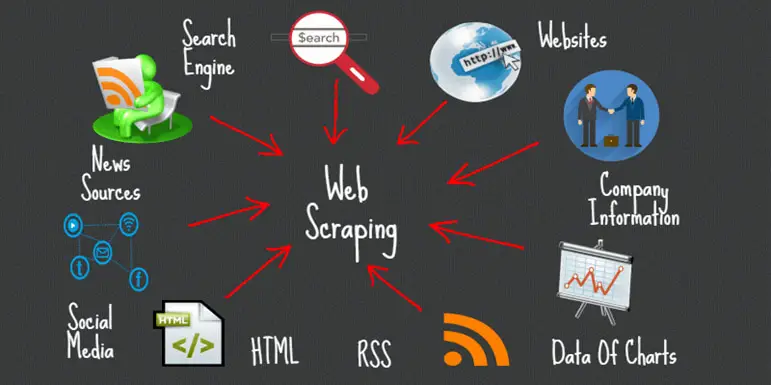

Gathering data from a USA-based website is a challenge, especially when you don’t live in the region. These websites often use technical measures to prevent public data collection and scraping.

Specific technical barriers, like IP blocking, session management, and CAPTCHAs, stand between you and the website. Websites also put up geo-location barriers that prevent visitors in particular regions from accessing their data.

Collecting data from different websites can also be very time-consuming, especially when the data is scattered across them. You may have to combine several sources to pool the required information.

In addition, some platforms prohibit data scraping in their terms of use. Ignoring such rules raises legal concerns and affects the legitimacy of your business and the data collected.

So, how can you get your desired data without getting into the bad books of web servers?

Is Web Scraping Legal in the US?

Scraping publicly available data is generally legal in the USA, but whether a particular project is lawful and ethical depends on the data being scraped, the scraping methods, and the purpose behind the scraping.

For instance, using web scraping to copy or reproduce copyrighted content may be illegal. Similarly, scraping websites that prohibit the practice may raise legal and ethical concerns.

US privacy laws also restrict the collection of sensitive personal data. Besides, you can face penalties if you misuse the data you collect, even when it is public.

Proxy Servers: A Hope for Businesses

Proxy servers are intermediaries between a user and a web server. When a user sends a request, it first goes to the proxy server, which forwards it to the target website. The website then sends its response to the proxy, which passes it back to the user.

Because the request arrives from the proxy, the web server sees the proxy's IP address instead of yours. With a US proxy, you appear to be browsing from within the USA.

This allows you to bypass geo-restrictions and use the website as a local visitor would. You get the information you need without falling victim to location-based IP blocking.
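The request flow described above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not a production setup: the proxy address in `US_PROXY` and the helper names `build_proxy_opener` and `fetch` are hypothetical placeholders, and a real endpoint and credentials would come from your proxy provider.

```python
import urllib.request

# Hypothetical proxy endpoint (placeholder). Replace it with the host,
# port, and credentials supplied by your proxy provider.
US_PROXY = "http://user:password@us.proxy.example.com:8080"


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, proxy_url: str = US_PROXY, timeout: float = 10.0) -> str:
    """Fetch url through the proxy and return the response body as text."""
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `fetch("https://example.com/")` would send the request to the proxy first, so the target website sees the proxy's IP address rather than yours.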

Proxy Servers and Web Scraping: An Incredible Bond

Proxy servers play a significant role in overcoming the barriers between you and US-based websites. With a pool of US proxies, you can rotate your IP address between requests, which reduces the chance of being detected or blocked by a website.
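IP rotation can be sketched as a simple round-robin over a list of proxies. The example below is an assumption-laden illustration: the addresses in `PROXY_POOL` are placeholders, and the `ProxyRotator` class is a hypothetical helper rather than part of any library. Commercial proxy services often handle rotation for you behind a single endpoint.

```python
import itertools
import urllib.request

# Hypothetical pool of US proxy endpoints (placeholders, not real servers).
PROXY_POOL = [
    "http://us1.proxy.example.com:8080",
    "http://us2.proxy.example.com:8080",
    "http://us3.proxy.example.com:8080",
]


class ProxyRotator:
    """Hand out proxies from a pool in round-robin order."""

    def __init__(self, proxies):
        proxies = list(proxies)
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self) -> str:
        """Return the next proxy URL, wrapping around at the end of the pool."""
        return next(self._cycle)

    def next_opener(self) -> urllib.request.OpenerDirector:
        """Return an opener that routes traffic through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)
```

Each call to `next_opener()` yields an opener bound to a different proxy, so consecutive requests leave from different IP addresses.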

A proxy also hides your real IP address, so you can scrape the web from any location. It does not remove legal and ethical obligations, which still apply to what you collect and how you use it.

A US proxy can also help you overcome geo-restrictions. The chances of getting blocked drop considerably, allowing you to gather information from multiple sources more quickly.

A reliable proxy service can also offer low downtime and stable performance and connectivity while you perform web scraping.

Best Practices for Web Scraping US-based Websites

We have already discussed how a US proxy can help you scrape a website.

Besides this method, a few other practices may prove helpful.

- You can adjust request headers so that your requests look like those sent by a regular browser. This lowers the chance of being flagged as a bot and blocked.

- Rotating the User-Agent header is another common technique. Varying it between requests makes the traffic look like it comes from different browsers rather than from a single automated client.

- Adding delays between requests makes scraping resemble normal human browsing behavior.

- You can also maintain session information, such as cookies, between requests, so that consecutive requests look like one continuous browsing session.

- Setting the Referer header makes a request look as if you arrived from another page, such as a search result or a page on the same website. This helps you avoid blocks aimed at requests that have no browsing context.
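The practices listed above can be combined in one small sketch built on Python's standard library. Everything here is illustrative: the `USER_AGENTS` strings are sample values, the delay range is an arbitrary assumption, and `build_request`, `build_session_opener`, `polite_delay`, and `crawl` are hypothetical helper names.

```python
import http.cookiejar
import random
import time
import urllib.request

# Sample User-Agent strings (illustrative; use current browser values in practice).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
]


def build_request(url, referer=None):
    """Build a request with browser-like headers and a randomly chosen User-Agent."""
    headers = {
        "User-Agent": random.choice(USER_AGENTS),
        "Accept": "text/html,application/xhtml+xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
    }
    if referer:
        headers["Referer"] = referer
    return urllib.request.Request(url, headers=headers)


def build_session_opener():
    """Return an opener that stores cookies and resends them on later requests."""
    jar = http.cookiejar.CookieJar()
    return urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))


def polite_delay(min_seconds=1.0, max_seconds=3.0):
    """Sleep for a random interval and return the number of seconds slept."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay


def crawl(urls):
    """Fetch urls in order within one session, pausing between requests."""
    opener = build_session_opener()
    previous = None
    pages = []
    for url in urls:
        if previous is not None:
            polite_delay()
        request = build_request(url, referer=previous)
        with opener.open(request, timeout=10) as response:
            pages.append(response.read())
        previous = url  # The page just visited becomes the next Referer.
    return pages
```

In this sketch, `crawl` reuses one cookie-aware opener for the whole run, so the session persists between requests, while each request gets a fresh User-Agent and the previous URL as its Referer.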

Conclusion

Gone are the days when accessing a US-based website and scraping its data was impossible. Today, a US proxy makes web scraping far more manageable.

You can use a US proxy to get the data you want for your business. It helps you bypass geo-restrictions, allowing you to access information as required.

However, you must only use the proxy for legitimate purposes, or you may face legal consequences.